Knowledge Curation System

- Author: Ayush Bhardwaj

What is a Personalized Knowledge Curation System?

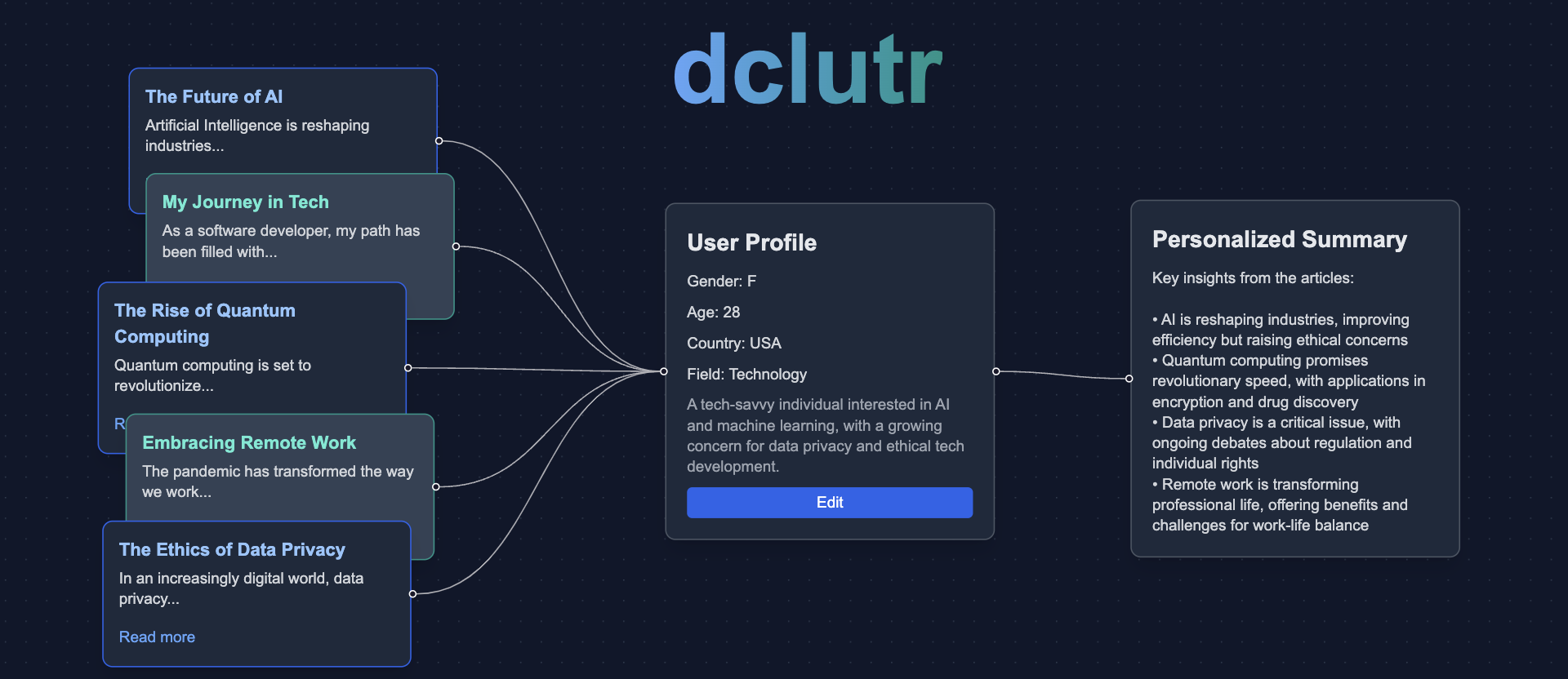

At its core, the system I’m building aims to collect information from diverse sources (news outlets, blogs, academic articles, and online discussions) and deliver concise, tailored summaries to individual users. But the goal isn’t just to provide information; it’s to present objective content that respects your ability to think for yourself.

This system isn’t about pushing opinions or reinforcing polarized narratives. Instead, it focuses on distilling essential facts that are relevant to your interests and circumstances. Think of it as an intelligent filter that helps you navigate the flood of information out there without getting bogged down by bias, noise, or unnecessary clutter.

Why Does This Matter?

In an age of information overload, staying informed often comes at the cost of time and mental energy. Scrolling through endless feeds or jumping between conflicting sources can leave you more confused than enlightened. My system addresses these issues by:

- Eliminating Bias: By prioritizing objective data over subjective takes, it empowers users to form their own views rather than adopting those of the content creators.

- Saving Time: You get exactly what you need, whether it’s a quick summary of global events or deep insights into a niche topic, without having to sift through irrelevant or repetitive information.

- Encouraging Critical Thinking: Instead of feeding you narratives, the system presents facts and context, enabling you to analyze and decide what to believe.

Where I Am Right Now

This is still a work in progress, but it’s been an exciting journey so far. Here’s what I’ve been focusing on:

Bias Detection and Correction in AI Systems

I am exploring the capabilities of large language models (LLMs) and comparing them to traditional NLP libraries (e.g., spaCy, NLTK, and Gensim) in detecting and correcting bias. LLMs bring advanced context understanding but may also reflect biases from their training data. Traditional NLP tools, which rely more on explicit rules, word lists, and smaller statistical models, are easier to inspect but often lack the nuance to address implicit biases effectively.
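To make the traditional side of that comparison concrete, here is a minimal sketch of a rule-based baseline: it flags loaded words from a small word list and swaps in more neutral alternatives. This is not the system's actual method. The `LOADED_TERMS` lexicon and the function names `flag_loaded_terms` and `neutralize` are hypothetical, invented only for illustration, and the sketch uses Python's standard `re` module rather than any of the libraries named above.

```python
import re

# Hypothetical, hand-written lexicon: loaded term -> more neutral alternative.
# A real system would need a much larger, carefully reviewed list.
LOADED_TERMS = {
    "slammed": "criticized",
    "disastrous": "unsuccessful",
    "radical": "far-reaching",
}


def _word_pattern(term):
    """Build a case-insensitive pattern that matches the term as a whole word."""
    return re.compile(r"\b" + re.escape(term) + r"\b", re.IGNORECASE)


def flag_loaded_terms(text, lexicon=LOADED_TERMS):
    """Return (term, suggestion, offset) for each loaded term found in text."""
    hits = []
    for term, suggestion in lexicon.items():
        for match in _word_pattern(term).finditer(text):
            hits.append((match.group(0).lower(), suggestion, match.start()))
    # Report hits in the order they appear in the text.
    return sorted(hits, key=lambda hit: hit[2])


def neutralize(text, lexicon=LOADED_TERMS):
    """Replace each loaded term with its suggested neutral alternative."""
    for term, suggestion in lexicon.items():
        text = _word_pattern(term).sub(suggestion, text)
    return text


sentence = "The senator slammed the disastrous policy."
print(flag_loaded_terms(sentence))
print(neutralize(sentence))
```

A word list like this catches only explicit wording. It cannot see framing, omission, or selective emphasis, which is the kind of implicit bias where an LLM's grasp of context may help, at the cost of whatever biases the model itself carries.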

Talking to People for Feedback

I’ve been reaching out to a variety of people to get their thoughts and ideas. Their feedback has been incredibly helpful in improving the idea and spotting areas I might have missed.

Understanding the Challenges

One of the biggest hurdles is sourcing content, since much of it is copyrighted and cannot be used freely. In addition, LLM training data often contains biases, which makes it hard to ensure fair and balanced results.

The Vision Ahead

This project is a small step towards a larger goal: to democratize access to unbiased knowledge and empower individuals with the tools to think critically.

Join the Conversation

Passionate about AI, news, or this idea? Let's connect: ayushb.bits@gmail.com.